H-LLM MULTI-MODEL

The reliability layer for AI that makes trust measurable across every model.

AI hallucinations, outputs that are false or misleading, erode trust in language models.

Hallucinations.cloud detects, compares, and verifies responses from multiple AI systems so users can rely on what they read.

Learn Why Accuracy Matters

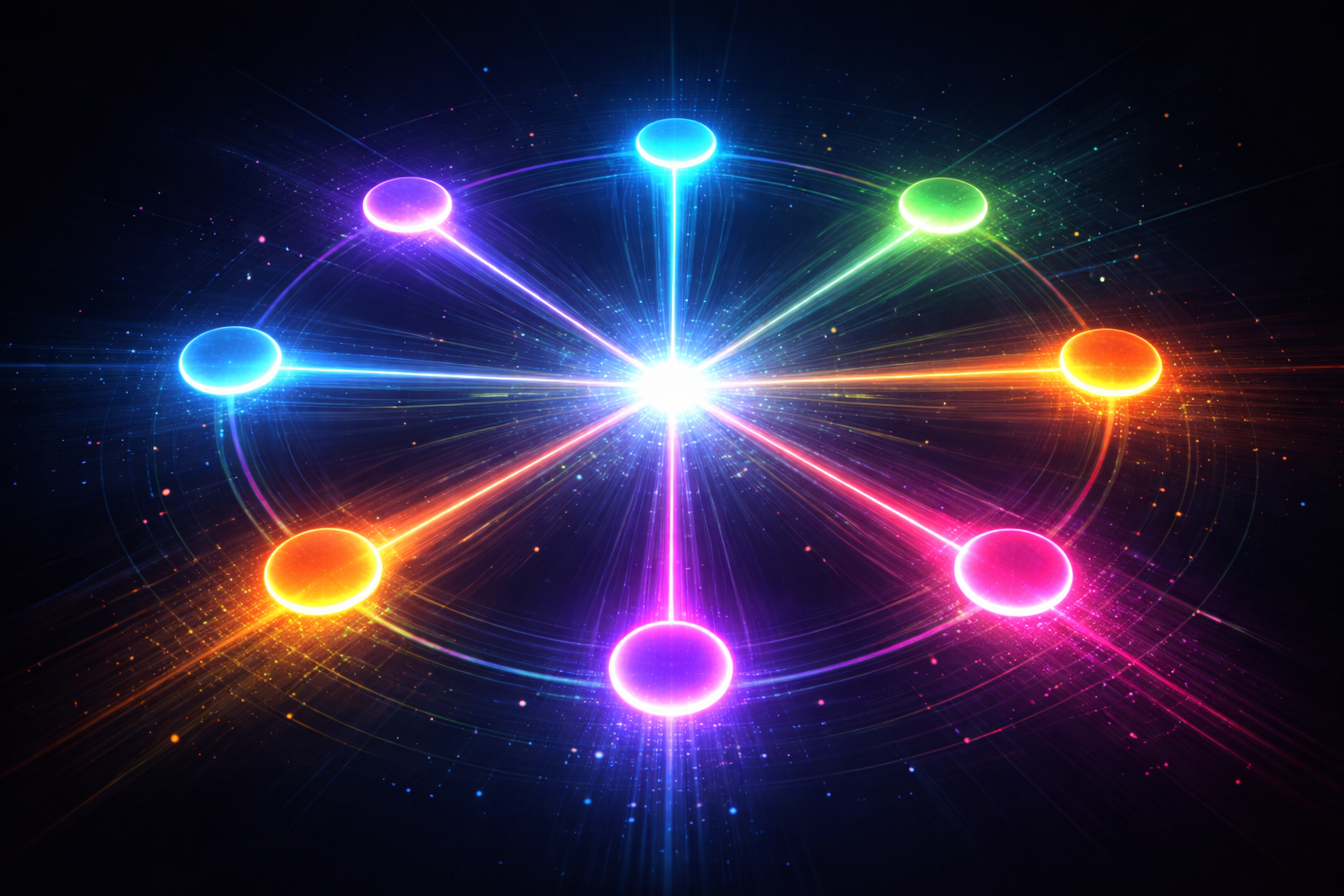

Hallucinations.cloud connects to eight leading AI models and services (GPT-4o, Claude, Gemini, Grok, Cohere, DeepSeek, OpenRouter, and Perplexity) and analyzes their outputs in real time, so contradictions and inconsistencies are detected immediately.

Compare responses from eight AI models simultaneously to identify inconsistencies and contradictions.

Automatically flag when AI models disagree, helping you identify potential hallucinations.

Submit queries in multiple languages and receive verified responses across all supported models.

Ask a question, compare AI responses, and view your live reliability score.

Secure, moderated testing environment.

Hallucinations.cloud is built for teams that depend on accurate AI output. The platform supports integrations, API access, and enterprise-grade analytics.

"AI does not need more power. It needs more truth." (Brian Demsey, Founder of Hallucinations.cloud)

Professional analysis of how hallucinations impact business operations...

What organizations need to know about AI verification...

Reflections on truth, technology, and the human condition...

A personal essay on verification and skepticism...